The Software Platform for Enterprise Generative AI

NVIDIA AI Enterprise is a cloud-native platform that accelerates data pipelines and enables secure, streamlined deployment of generative AI applications, ensuring smooth transitions from prototype to production.

Optimize Performance

NVIDIA NIM and CUDA-X microservices provide an optimized runtime and easy-to-use building blocks that streamline generative AI development.

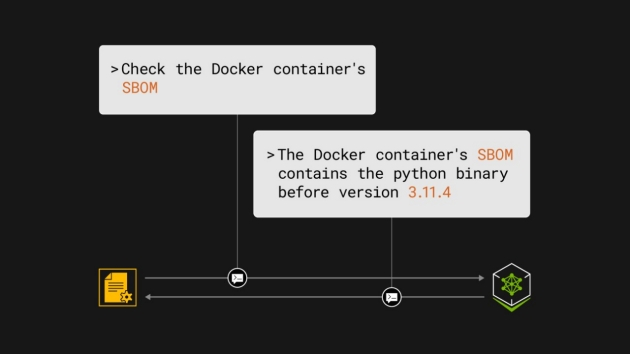

Deploy With Confidence

Protect company data and intellectual property through ongoing monitoring for security vulnerabilities, while retaining ownership of your model customizations.

Run Anywhere

Standards-based, containerized microservices are certified to run in the cloud, in the data center, and on workstations.

Enterprise-Grade

Predictable production branches for API stability, management software, and NVIDIA Enterprise Support help keep projects on track.

Inference for Every AI Workload

Run inference on trained machine learning or deep learning models from any framework on any processor—GPU, CPU, or other—with NVIDIA Triton™ Inference Server. Part of the NVIDIA AI platform and available with NVIDIA AI Enterprise, Triton Inference Server is open-source software that standardizes AI model deployment and execution across every workload.
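Triton accepts inference requests over HTTP/REST and gRPC using the KServe v2 inference protocol, so a client needs no framework-specific code. The sketch below is a minimal illustration, not an official client. It assumes a Triton server listening on its default HTTP port 8000 and a hypothetical model `my_model` with an FP32 input `INPUT0` and an output `OUTPUT0`. It builds the JSON request body using only the Python standard library and posts it to the `/v2/models/<name>/infer` endpoint. NVIDIA's `tritonclient` package offers a full-featured alternative.

```python
import json
import urllib.request
from math import prod


def build_infer_request(input_name, datatype, shape, data, output_names):
    """Build a KServe v2 inference request body for a single input tensor.

    `data` is the tensor flattened in row-major order, which is the form
    the JSON variant of the protocol expects.
    """
    expected = prod(shape)
    if len(data) != expected:
        raise ValueError(f"shape {shape} needs {expected} values, got {len(data)}")
    return {
        "inputs": [
            {"name": input_name, "shape": list(shape), "datatype": datatype, "data": list(data)}
        ],
        "outputs": [{"name": name} for name in output_names],
    }


def infer(base_url, model_name, body, timeout=10.0):
    """POST the body to the server's infer endpoint and return the decoded JSON reply."""
    request = urllib.request.Request(
        f"{base_url}/v2/models/{model_name}/infer",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    # Hypothetical tensor names; use the ones from your model's configuration.
    body = build_infer_request("INPUT0", "FP32", [1, 4], [0.1, 0.2, 0.3, 0.4], ["OUTPUT0"])
    print(json.dumps(body))
    # Requires a running server and a deployed model named "my_model":
    # reply = infer("http://localhost:8000", "my_model", body)
    # print(reply["outputs"][0]["data"])
```

Because the request format is the same for every backend, the same client code works whether the model behind `my_model` came from PyTorch, TensorFlow, ONNX, or another supported framework.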

Deploying, Optimizing, and Benchmarking LLMs

Get step-by-step instructions on how to serve large language models (LLMs) efficiently using Triton Inference Server.
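LLM serving benchmarks usually come down to a handful of latency and throughput numbers. The sketch below is a hedged illustration and not the method used in the linked instructions. From client-side timestamps of streamed responses, it computes three commonly reported metrics: time to first token (TTFT), mean inter-token latency, and aggregate output tokens per second. The `StreamTiming` record and the `summarize` function are hypothetical names, and the timings in the example are made up.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List


@dataclass
class StreamTiming:
    """Client-side timestamps, in seconds, for one streamed LLM request."""
    request_start: float
    token_times: List[float]  # arrival time of each output token, in order


def summarize(runs):
    """Return mean TTFT, mean inter-token latency, and output tokens/s."""
    done = [r for r in runs if r.token_times]  # ignore requests with no tokens
    if not done:
        raise ValueError("no request produced any output tokens")
    ttfts = [r.token_times[0] - r.request_start for r in done]
    gaps = [later - earlier
            for r in done
            for earlier, later in zip(r.token_times, r.token_times[1:])]
    total_tokens = sum(len(r.token_times) for r in done)
    # Throughput window: first request sent to last token received.
    window = max(r.token_times[-1] for r in done) - min(r.request_start for r in done)
    return {
        "mean_ttft_s": mean(ttfts),
        "mean_inter_token_latency_s": mean(gaps) if gaps else 0.0,
        "output_tokens_per_s": total_tokens / window,
    }


if __name__ == "__main__":
    # Made-up timings for two overlapping requests, for illustration only.
    runs = [StreamTiming(0.0, [0.5, 0.6, 0.7]), StreamTiming(0.2, [0.6, 0.8])]
    print(summarize(runs))
```

TTFT mostly reflects prompt processing and queueing, while inter-token latency reflects per-token generation speed. For that reason, the two are reported separately rather than folded into one average latency.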

What Is NVIDIA TAO?

Eliminate the need for mountains of data and an army of data scientists as you create AI/machine learning models, and speed up the development process with transfer learning. This powerful technique instantly transfers learned features from an existing neural network model to a new customized one.

The open-source NVIDIA TAO, built on TensorFlow and PyTorch, harnesses transfer learning while simplifying the model training process and optimizing the model for inference throughput on practically any platform. The result is an ultra-streamlined workflow: take one of the pretrained models, adapt it to your own real or synthetic data, then optimize it for inference throughput, all without needing AI expertise or large training datasets.
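In its simplest form, transfer learning keeps a pretrained network's feature extractor frozen and trains only a new output layer on the target data. The pure-Python sketch below illustrates that idea under clearly stated assumptions. `PRETRAINED_W` is a hypothetical, hand-picked stand-in for a real pretrained backbone. The new head is a logistic-regression classifier trained with stochastic gradient descent. The six-point dataset is made up. The sketch does not use or mimic TAO's actual tools or APIs.

```python
import math

# Hypothetical "pretrained" backbone: these weights stand in for features
# learned on a large source dataset and stay frozen during fine-tuning.
PRETRAINED_W = [[1.0, -1.0], [0.5, 0.5], [-1.0, 2.0]]


def extract_features(x):
    """Frozen backbone: one linear layer followed by ReLU."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in PRETRAINED_W]


def train_head(samples, labels, epochs=200, lr=0.1):
    """Fit a new logistic-regression head on frozen features with SGD.

    Only the head's weights and bias are updated; PRETRAINED_W never changes.
    """
    feats = [extract_features(x) for x in samples]  # computed once: backbone is frozen
    weights = [0.0] * len(PRETRAINED_W)
    bias = 0.0
    for _ in range(epochs):
        for f, y in zip(feats, labels):
            z = sum(w * fi for w, fi in zip(weights, f)) + bias
            p = 1.0 / (1.0 + math.exp(-z))
            err = p - y  # gradient of the log-loss with respect to z
            weights = [w - lr * err * fi for w, fi in zip(weights, f)]
            bias -= lr * err
    return weights, bias


def predict(weights, bias, x):
    """Classify x as 1 or 0 using the frozen backbone plus the trained head."""
    z = sum(w * fi for w, fi in zip(weights, extract_features(x))) + bias
    return 1 if z > 0 else 0


if __name__ == "__main__":
    # Small made-up target dataset: label 1 when x[0] > x[1].
    xs = [(2, 0), (3, 1), (2, -1), (0, 2), (1, 3), (-1, 2)]
    ys = [1, 1, 1, 0, 0, 0]
    w, b = train_head(xs, ys)
    print([predict(w, b, x) for x in xs])
```

Because the backbone is frozen, its features are computed once up front, and only three head weights and a bias are learned. This is why reusing learned features can get by with far less target data and compute than training a model from scratch.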