NVIDIA Isaac

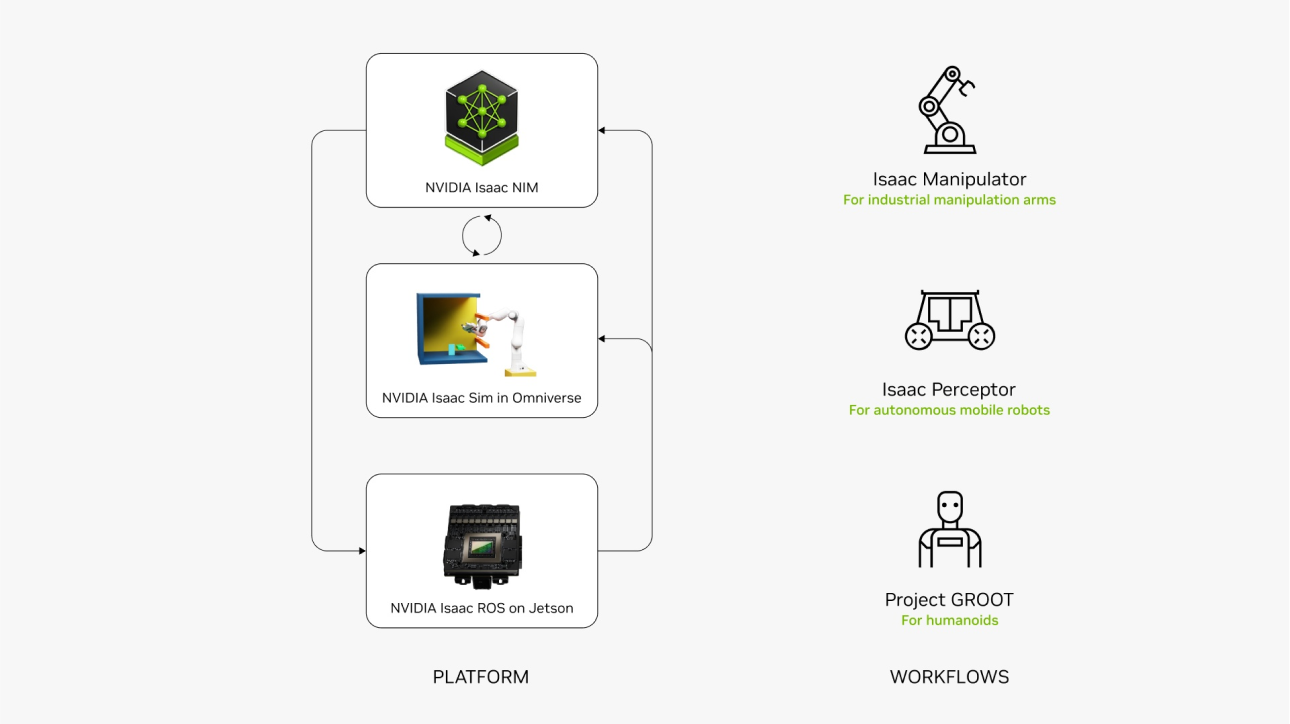

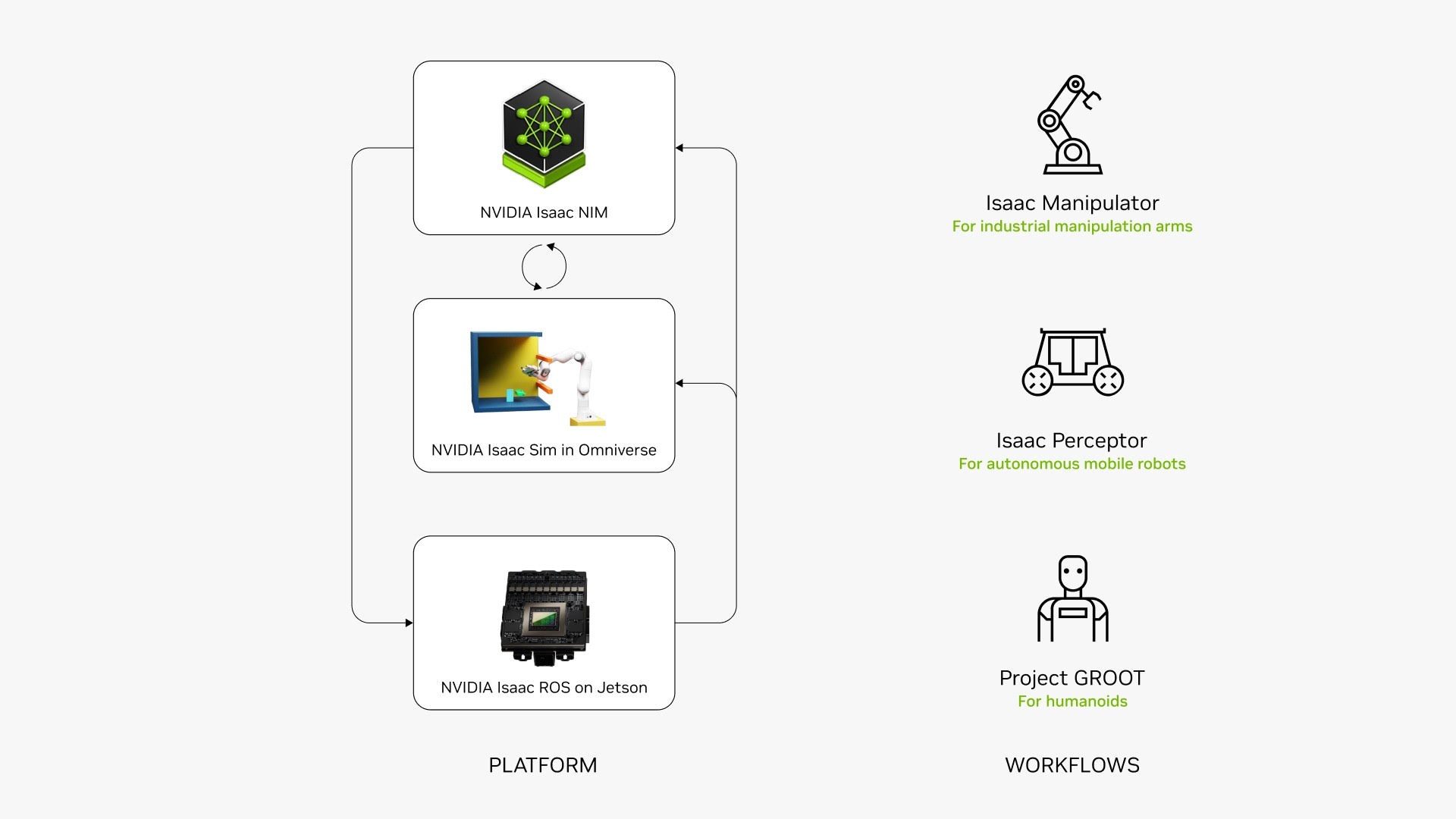

The NVIDIA Isaac™ AI robot development platform consists of NVIDIA-accelerated libraries, application frameworks, and AI models that accelerate the development of AI robots such as autonomous mobile robots (AMRs), arms and manipulators, and humanoids.

NVIDIA Isaac Libraries and AI Models

The NVIDIA Robotics full stack of acceleration libraries and optimized AI models gives you a more efficient way to develop, train, simulate, deploy, operate, and optimize robot systems.

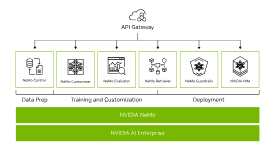

AI Foundry

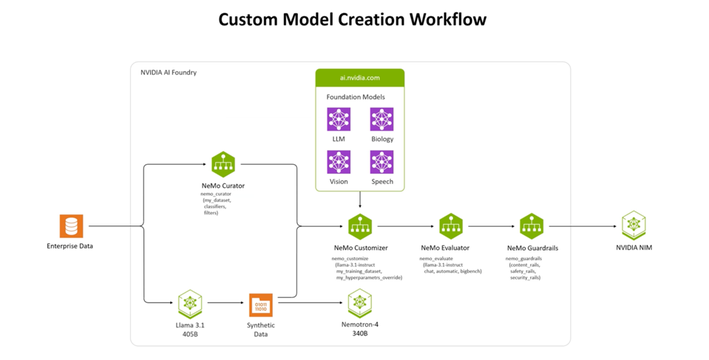

NVIDIA AI Foundry is a platform and service for building custom generative AI models with enterprise data and domain-specific knowledge. Just as TSMC manufactures chips designed by other companies, NVIDIA AI Foundry enables organizations to develop their own AI models.

A chip foundry provides state-of-the-art transistor technology, manufacturing process, large chip fabs, expertise, and a rich ecosystem of third-party tools and library providers. Similarly, NVIDIA AI Foundry includes NVIDIA-created AI models like Nemotron and Edify, popular open foundation models, NVIDIA NeMo™ software for customizing models, and dedicated capacity on NVIDIA DGX™ Cloud—built and backed by NVIDIA AI experts. The output is NVIDIA NIM™—an inference microservice that includes the custom model, optimized engines, and a standard API—which can be deployed anywhere.

NVIDIA AI Foundry and its libraries are integrated into the world’s leading AI ecosystem of startups, enterprise software providers, and global service providers.

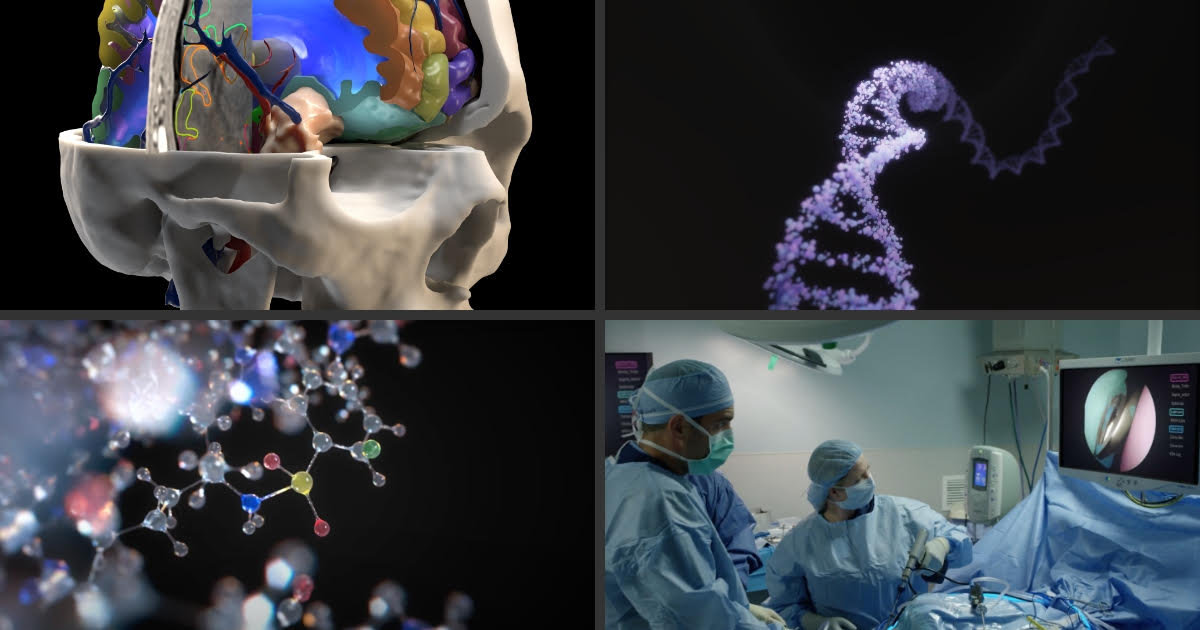

Clara

NVIDIA® Clara™ is a platform of AI applications and accelerated frameworks for healthcare developers, researchers, and medical device makers creating AI solutions to improve healthcare delivery and accelerate drug discovery. Clara’s domain-specific tools, pretrained AI models, and accelerated applications are enabling AI breakthroughs in numerous fields, including genomics, natural language processing (NLP), imaging, medical devices, drug discovery, and smart hospitals.

Generative AI

NVIDIA AI is the world’s most advanced platform for generative AI and is relied on by organizations at the forefront of innovation. Designed for the enterprise and continuously updated, the platform lets you confidently deploy generative AI applications into production, at scale, anywhere.

CUDA-X

Developers, researchers, and inventors across a wide range of domains use GPU programming to accelerate their applications. Developing these applications requires a robust programming environment with highly optimized, domain-specific microservices and libraries. NVIDIA CUDA-X, built on top of CUDA®, is a collection of microservices, libraries, tools, and technologies for building applications that deliver dramatically higher performance than alternatives across data processing, AI, and high-performance computing (HPC).

VIA

What Is A Visual AI Agent?

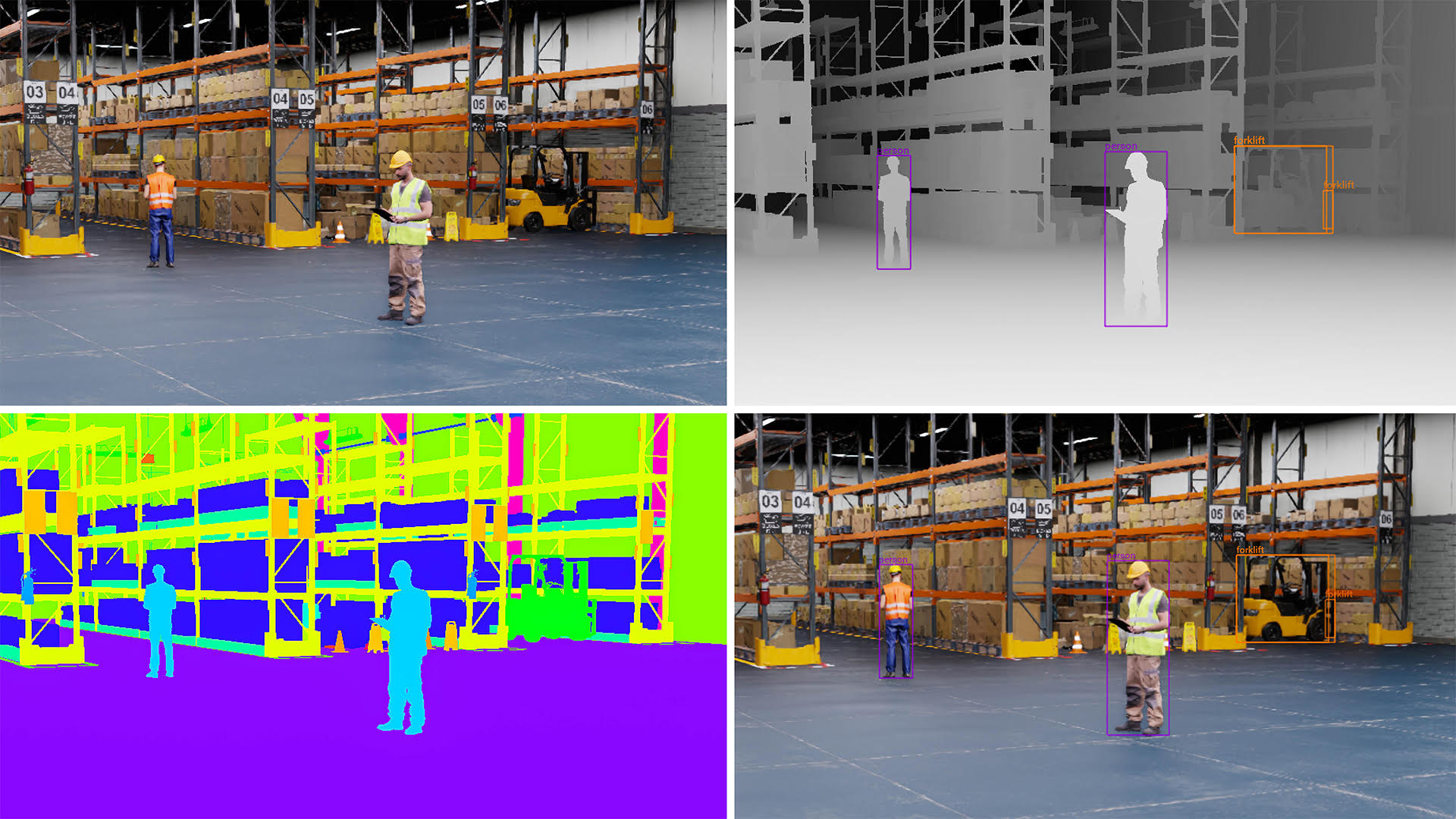

A visual AI agent combines vision and language modalities to understand natural language prompts and perform visual question answering. For example, it can answer a broad range of natural language questions about a recorded or live video stream. This deeper understanding of video content enables more accurate and meaningful interpretations, improving video analytics applications and the interpretation of real-world scenarios. These agents promise to unlock entirely new industrial applications.

Streamline Every Industrial Operation

Highly perceptive, accurate, and interactive visual AI agents will be deployed throughout factories, warehouses, retail stores, airports, traffic intersections, and more. This will have a tremendous impact on operations teams looking to make better decisions using richer insights generated from natural interactions. Managers and operators will communicate with these agents in natural language, all powered by generative AI and large vision language models (VLMs) with NVIDIA NIM™ microservices at their core.

NIM

Accelerate Your AI Deployment With NVIDIA NIM

NVIDIA NIM™, part of NVIDIA AI Enterprise, provides containers to self-host GPU-accelerated inferencing microservices for pretrained and customized AI models across clouds, data centers, and workstations. Upon deployment with a single command, NIM microservices expose industry-standard APIs for simple integration into AI applications, development frameworks, and workflows. Built on pre-optimized inference engines from NVIDIA and the community, including NVIDIA® TensorRT™ and TensorRT-LLM, NIM microservices automatically optimize response latency and throughput for each combination of foundation model and GPU system detected at runtime. NIM containers also provide standard observability data feeds and built-in support for autoscaling on Kubernetes on GPUs.
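To make the "industry-standard APIs" point concrete, here is a minimal sketch of what a client call might look like. It assumes a NIM microservice for a language model is already running locally and serving an OpenAI-style chat completions route. The base URL, port, and model name are placeholders rather than values from this page, so check them against the documentation of the specific NIM you deploy.

```python
import json
import urllib.request

# Assumption: a NIM LLM container is listening on this host and port.
NIM_BASE_URL = "http://localhost:8000"


def build_chat_request(prompt, model, base_url=NIM_BASE_URL, max_tokens=128):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,  # hypothetical model identifier
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request, timeout=30):
    """Send the request and return the decoded JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

With a service running, `send(build_chat_request("Summarize this log.", model="example-model"))` would return the parsed JSON reply. Keeping request construction separate from sending makes the payload easy to inspect without a live endpoint.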

NeMo

NVIDIA NeMo™ is an end-to-end platform for developing custom generative AI, including large language models (LLMs), multimodal, vision, and speech AI, anywhere. Deliver enterprise-ready models with precise data curation, cutting-edge customization, retrieval-augmented generation (RAG), and accelerated performance. NeMo is part of NVIDIA AI Foundry, a platform and service for building custom generative AI models with enterprise data and domain-specific knowledge.

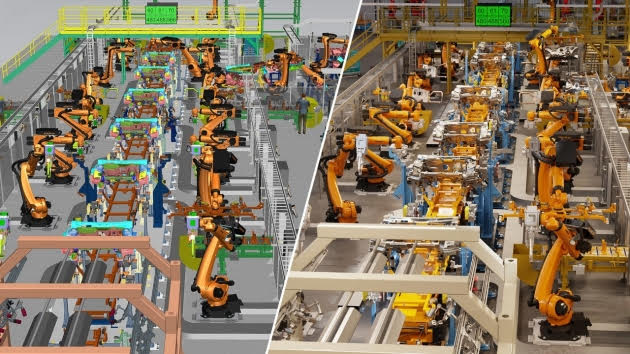

Digital Twin

The world’s enterprises are racing to digitalize and become software-defined. With NVIDIA Omniverse™, NVIDIA AI, and OpenUSD, developers are building a new era of digital twins to design, simulate, operate, and optimize their products and production facilities. Once born, these digital twins become the testing grounds for generative physical AI to power autonomous systems.

Omniverse Replicator

Omniverse Replicator is a framework for developing custom synthetic data generation pipelines and services. Developers can generate physically accurate 3D synthetic data to enhance the training and performance of AI perception networks used in autonomous vehicles, robotics, and intelligent video analytics applications.

Omniverse

NVIDIA Omniverse™ is a platform of APIs, SDKs, and services that enable developers to easily integrate Universal Scene Description (OpenUSD) and NVIDIA RTX™ rendering technologies into existing software tools and simulation workflows for building AI systems.

Easily Customize and Extend

Develop new tools and workflows from scratch with low- and no-code sample apps and easy-to-modify extensions with Omniverse SDKs.

Enhance Your 3D Applications

Supercharge your existing software tools and applications with OpenUSD, RTX, accelerated computing, and generative AI technologies through Omniverse Cloud APIs.

Deploy Anywhere

Develop and deploy custom applications on RTX-enabled workstations or virtual workstations, or host and stream your application from Omniverse Cloud.

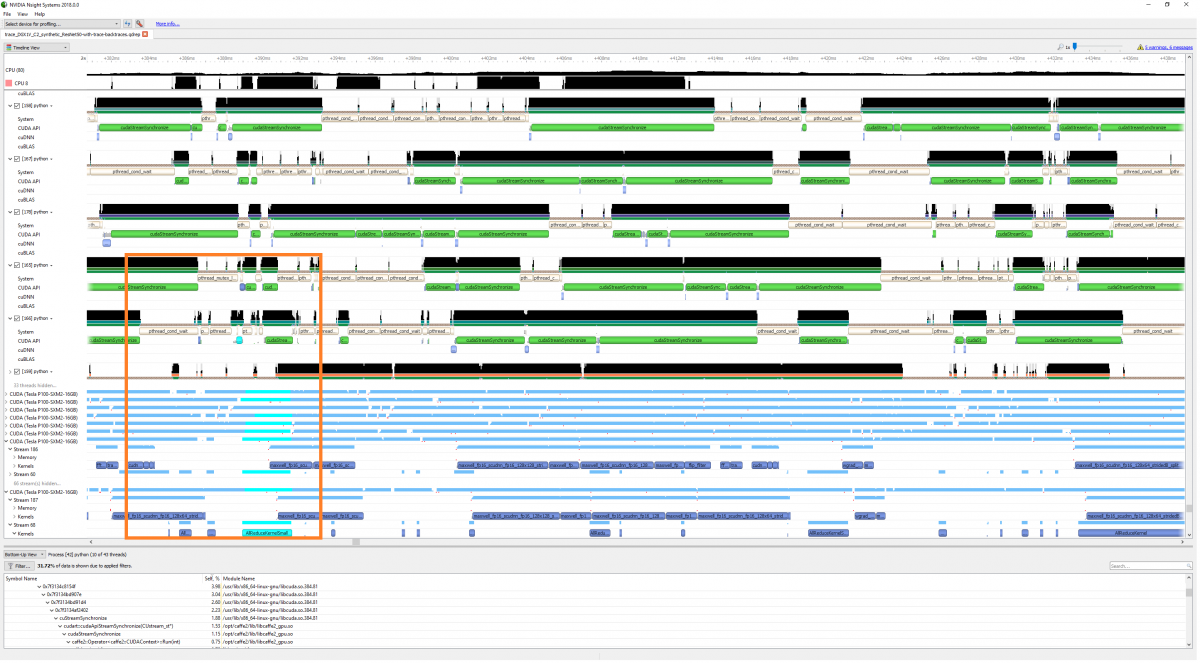

Nsight Graphics

NVIDIA Nsight Graphics is a standalone developer tool for debugging, profiling, and exporting frames from applications built with graphics APIs such as Direct3D, Vulkan, and OpenGL.

Nsight Systems

Nsight Systems is a low-overhead, system-wide profiling tool that provides the insights developers need to analyze and optimize software performance. It uses GPU tracing, CPU sampling and tracing, and OS thread state tracing to visualize an application’s algorithms, helping developers identify the largest opportunities to optimize their code.

Isaac Manipulator

The Isaac Manipulator workflow is built on Isaac ROS, letting you build AI-enabled robot arms, or manipulators, that can seamlessly perceive, understand, and interact with their environments.

Isaac Perceptor

NVIDIA Isaac Perceptor

Isaac Perceptor is a workflow built on Isaac ROS that lets you quickly build robust autonomous mobile robots (AMRs) that can perceive, localize, and operate in unstructured environments like warehouses or factories.

Isaac ROS

NVIDIA Isaac ROS

Isaac ROS is built on the open-source ROS 2 (Robot Operating System) software framework. This means the millions of developers in the ROS community can easily take advantage of NVIDIA-accelerated libraries and AI models to accelerate their AI robot development and deployment workflows.

Isaac Sim

NVIDIA Isaac Sim

NVIDIA Isaac Sim™, built on NVIDIA Omniverse™, helps you design, simulate, test, and train AI-based robots and autonomous machines in a physically based virtual environment.

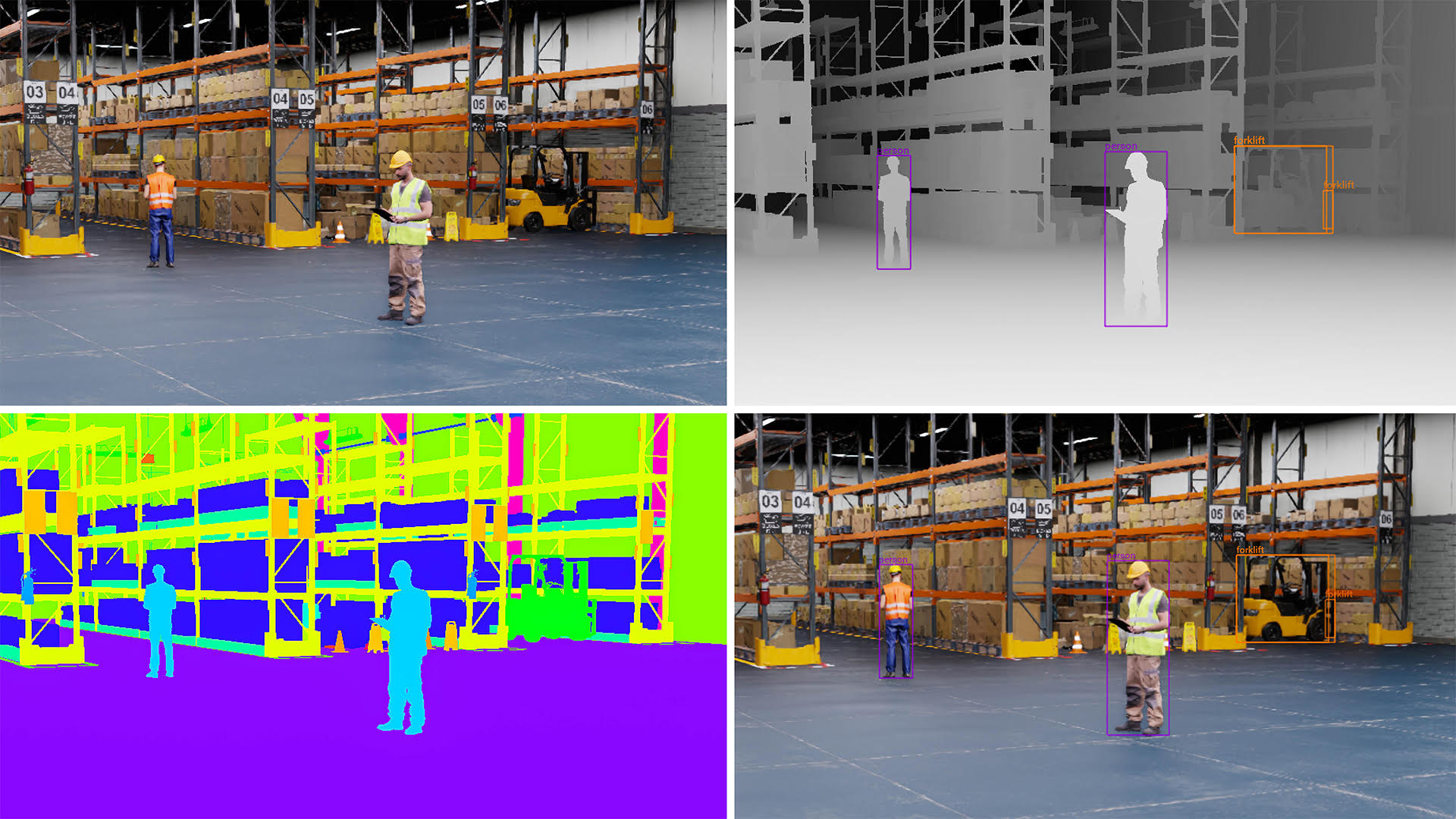

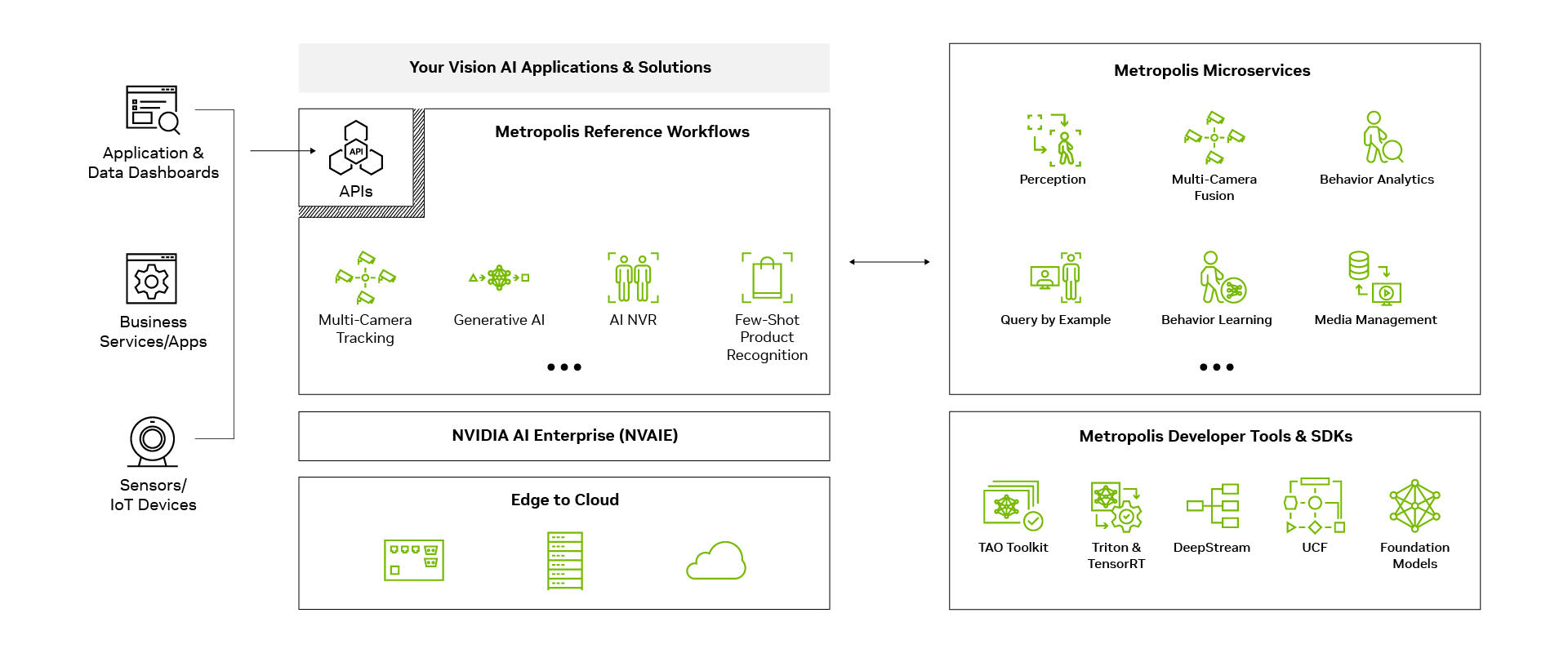

Metropolis Microservices

Ready to accelerate your vision AI journey from the edge to any cloud? This suite of powerful cloud-native microservices and reference applications will help you fast-track the development and deployment of your vision AI applications.

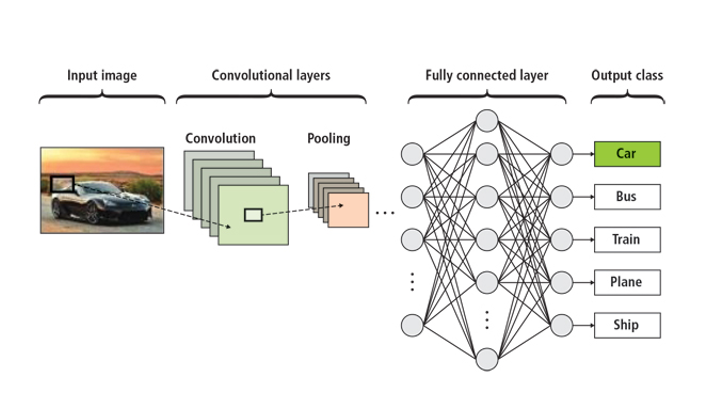

TensorRT

NVIDIA® TensorRT™ is an ecosystem of APIs for high-performance deep learning inference. TensorRT includes an inference runtime and model optimizations that deliver low latency and high throughput for production applications. The TensorRT ecosystem includes TensorRT, TensorRT-LLM, TensorRT Model Optimizer, and TensorRT Cloud.

DeepStream

NVIDIA’s DeepStream SDK is a complete streaming analytics toolkit based on GStreamer for AI-based multi-sensor processing and video, audio, and image understanding. It’s ideal for vision AI developers, software partners, startups, and OEMs building intelligent video analytics (IVA) apps and services.

You can now create stream-processing pipelines that incorporate neural networks and other complex processing tasks like tracking, video encoding/decoding, and video rendering. These pipelines enable real-time analytics on video, image, and sensor data.
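As a rough illustration of such a pipeline, the sketch below assembles a GStreamer launch description that decodes a video file, batches frames, runs a detector, and renders the overlay. The element names (`nvv4l2decoder`, `nvstreammux`, `nvinfer`, `nvvideoconvert`, `nvdsosd`, `nveglglessink`) are recalled from the DeepStream plugin set and should be checked against your installed version. The file paths are hypothetical, and the function only builds the string; it does not require GStreamer to be installed.

```python
def build_pipeline(video_path, infer_config, width=1280, height=720):
    """Return a gst-launch style description for one H.264 MP4 source.

    Assumes paths contain no spaces, since no quoting is applied.
    """
    # Stage 1: read and decode the file, then link into the muxer's first pad.
    decode = " ! ".join([
        f"filesrc location={video_path}",
        "qtdemux",
        "h264parse",
        "nvv4l2decoder",
        "m.sink_0",
    ])
    # Stage 2: batch frames, run inference, draw results, and render.
    analyze = " ! ".join([
        f"nvstreammux name=m batch-size=1 width={width} height={height}",
        f"nvinfer config-file-path={infer_config}",
        "nvvideoconvert",
        "nvdsosd",
        "nveglglessink",
    ])
    # The two chains are separated by a space: the first ends at the named
    # muxer pad, the second starts with the muxer element itself.
    return f"{decode} {analyze}"


if __name__ == "__main__":
    # Hypothetical inputs; pass the printed string to gst-launch-1.0.
    print(build_pipeline("sample.mp4", "detector_config.txt"))
```

A tracker or an encoder stage would slot in after `nvinfer` in the second chain, which is one reason to keep the stages in a list rather than a single hard-coded string.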

Triton

NVIDIA Triton™ Inference Server, part of the NVIDIA AI platform and available with NVIDIA AI Enterprise, is open-source software that standardizes AI model deployment and execution across every workload. It lets teams deploy AI models from multiple deep learning and machine learning frameworks, including TensorRT, TensorFlow, PyTorch, ONNX, OpenVINO, Python, and RAPIDS FIL. Triton supports inference across cloud, data center, edge, and embedded devices on NVIDIA GPUs, x86 and Arm CPUs, or AWS Inferentia, and delivers optimized performance for many query types, including real-time, batched, ensemble, and audio/video streaming. NVIDIA AI Enterprise is a software platform that accelerates the data science pipeline and streamlines the development and deployment of production AI.
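Because deployment is standardized, a client can talk to any served model the same way. The sketch below builds an HTTP inference request in the shape of the KServe v2 inference protocol, which Triton's HTTP endpoint follows. The model name, tensor name, and server address are illustrative assumptions, so substitute the values from your own model configuration.

```python
import json
import math
import urllib.request


def build_infer_request(model_name, input_name, values, shape,
                        datatype="FP32", base_url="http://localhost:8000"):
    """Build (but do not send) a v2-protocol inference request."""
    # The flat value list must contain exactly as many items as the shape implies.
    if math.prod(shape) != len(values):
        raise ValueError("shape does not match the number of values")
    payload = {
        "inputs": [{
            "name": input_name,      # hypothetical tensor name
            "shape": list(shape),
            "datatype": datatype,
            "data": list(values),    # row-major flattened tensor
        }]
    }
    return urllib.request.Request(
        url=f"{base_url}/v2/models/{model_name}/infer",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

Sending the request with `urllib.request.urlopen` against a running server would return a JSON body with an `outputs` list in the same tensor format. For production clients, the official Triton client libraries are the more robust choice.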

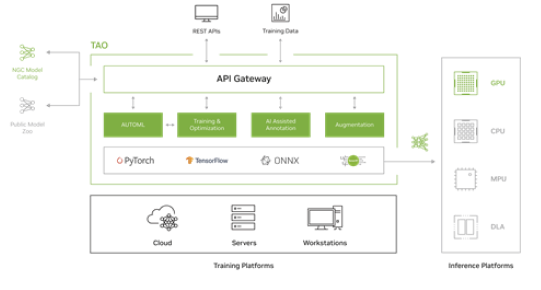

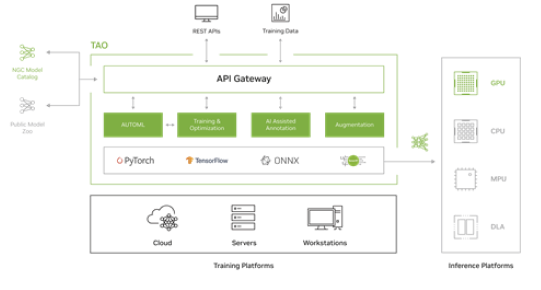

TAO

Looking for a faster, easier way to create highly accurate, customized, and enterprise-ready AI models to power your vision AI applications? The open-source TAO for AI training and optimization delivers everything you need, putting the power of the world’s best Vision Transformers (ViTs) in the hands of every developer and service provider. You can now create state-of-the-art computer vision models and deploy them on any device (GPUs, CPUs, and MCUs), whether at the edge or in the cloud.

JetPack

The NVIDIA JetPack SDK, which powers the Jetson modules, is the most comprehensive solution for building end-to-end accelerated AI applications, significantly reducing time to market. NVIDIA JetPack includes three components:

Jetson Linux: A Board Support Package (BSP) with bootloader, Linux kernel, Ubuntu desktop environment, NVIDIA drivers, toolchain, and more. It also includes security and over-the-air (OTA) features.

Jetson AI Stack: A CUDA-accelerated AI stack that includes a complete set of libraries for accelerating GPU computing, multimedia, graphics, and computer vision. It supports application frameworks such as Metropolis for building, deploying, and scaling vision AI applications, Isaac for building high-performance robotics applications, and Holoscan for building high-performance computing (HPC) applications with real-time insights and sensor processing capabilities from edge to cloud.

Jetson Platform Services: A collection of ready-to-use services to accelerate AI application development on Jetson.

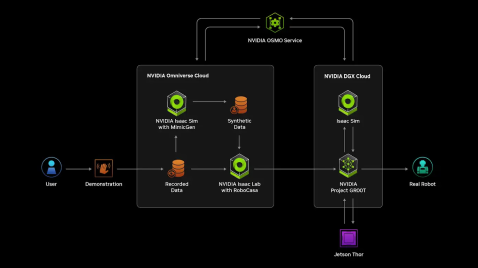

Simulation and Robot Learning

Design, simulate, test, and train your AI-based robots and autonomous machines in a physically based virtual environment.

NVIDIA Isaac Lab

Isaac Lab is a lightweight sample application built on Isaac Sim and optimized for robot learning, which is critical for training robot foundation models.

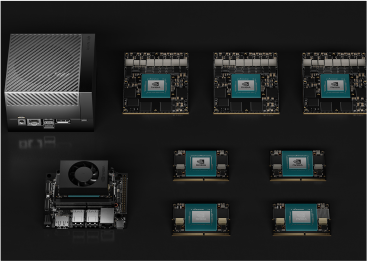

NVIDIA-Accelerated Systems

NVIDIA’s three computing platforms streamline and accelerate developer workflows: NVIDIA DGX™ systems for building robotics AI models, NVIDIA OVX™ for simulating, testing, and training them, and NVIDIA AGX™ for deploying and running them.

NVIDIA DGX

The NVIDIA DGX platform combines the best of NVIDIA software and infrastructure. It’s ideal for training multimodal foundation models for robots.

NVIDIA OVX

NVIDIA OVX systems provide industry-leading graphics and compute performance to accelerate the next generation of robotics.

NVIDIA AGX

NVIDIA AGX systems, including NVIDIA Jetson™, offer high performance and energy efficiency, making them the leading platform for robotics. Trained, tested, and optimized robot AI models are deployed to these systems for real-world operation.

NVIDIA OSMO

NVIDIA OSMO is a cloud-native workflow orchestration platform that lets you easily scale your workloads across distributed environments—from on-premises to private and public cloud resource clusters.